Transactional Outbox: How to safely offload work into the background

Modern distributed systems often rely on asynchronous processing to scale operations and improve responsiveness.

While offloading this work to background jobs via message queues seems straightforward, the moment you introduce them alongside a relational database, you’re walking into a classic trap: the dual write problem.

In this article, we explore the failure modes of naïve implementations, demonstrate the elegance of the Transactional Outbox pattern, and discuss how to scale it with modern tooling.

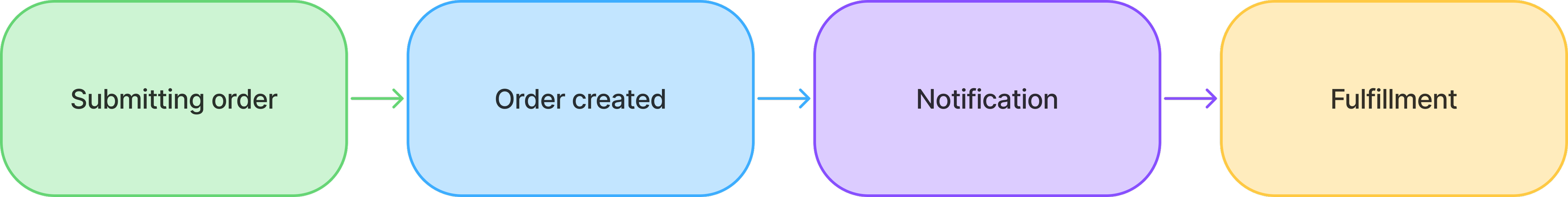

For the purpose of this article, let's consider a basic e-commerce setup: after a user places an order via an API call, the system continues processing it by sending notifications and starting order fulfilment.

Doing It Wrong: A Dual Write Dilemma

A common but incorrect approach looks like this:

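```python
@router.post('/orders')
async def create_order(order):
    # Write 1: the database
    order_created = await save_to_database(order)

    order_created_event = ...

    # Write 2: the message broker, outside any database transaction
    await publish_event(order_created_event)

    return order_created
```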

The API endpoint accepts an incoming order, saves it in the database, publishes an event to Kafka or a Redis queue, and returns the response. A background worker picks up the event and continues with the email notification and order fulfilment.

It seems innocent enough, but what happens if the database write succeeds and the event publishing fails? You are left in an inconsistent state where the order is accepted but never processed.

A common suggestion to fix this is: “Just commit to the database after you have published the event.” Congratulations, you have simply reversed the failure case. If the database write fails, you have emitted an event for an order that does not exist in your system. Your workers are processing ghost orders!
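Here is a minimal simulation that makes both failure modes concrete. FakeDatabase, FakeBroker and the two helper functions are hypothetical in-memory stand-ins for a real database and Kafka/Redis. They are not part of the article's code:

```python
class BrokerDown(Exception):
    """Raised by the fake broker when publishing fails."""

class FakeDatabase:
    """Hypothetical stand-in for the database; writes are committed immediately."""
    def __init__(self, fail: bool = False):
        self.orders: list[str] = []
        self.fail = fail

    def save(self, order_id: str) -> None:
        if self.fail:
            raise RuntimeError("database write failed")
        self.orders.append(order_id)

class FakeBroker:
    """Hypothetical stand-in for Kafka or a Redis queue."""
    def __init__(self, fail: bool = False):
        self.events: list[str] = []
        self.fail = fail

    def publish(self, order_id: str) -> None:
        if self.fail:
            raise BrokerDown("broker unavailable")
        self.events.append(order_id)

def save_then_publish(db: FakeDatabase, broker: FakeBroker, order_id: str) -> None:
    db.save(order_id)         # write 1 succeeds...
    broker.publish(order_id)  # ...but write 2 may still fail

def publish_then_save(db: FakeDatabase, broker: FakeBroker, order_id: str) -> None:
    broker.publish(order_id)  # the event is already out...
    db.save(order_id)         # ...but the order may never be stored

# Failure mode 1: order stored, event lost
db1, broker1 = FakeDatabase(), FakeBroker(fail=True)
try:
    save_then_publish(db1, broker1, "order-1")
except BrokerDown:
    pass
print(db1.orders, broker1.events)  # ['order-1'] []

# Failure mode 2: event emitted for a ghost order
db2, broker2 = FakeDatabase(fail=True), FakeBroker()
try:
    publish_then_save(db2, broker2, "order-2")
except RuntimeError:
    pass
print(db2.orders, broker2.events)  # [] ['order-2']
```

In both cases the caller sees an exception. By then, however, the first write has already taken effect and cannot be undone.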

Using The Transactional Outbox Pattern

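The core problem with both approaches is the dual write. Writing to two separate systems as one atomic transaction is not possible in a non-durable execution environment like an API call. The process can crash, or the second write can fail, after the first write has already taken effect.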

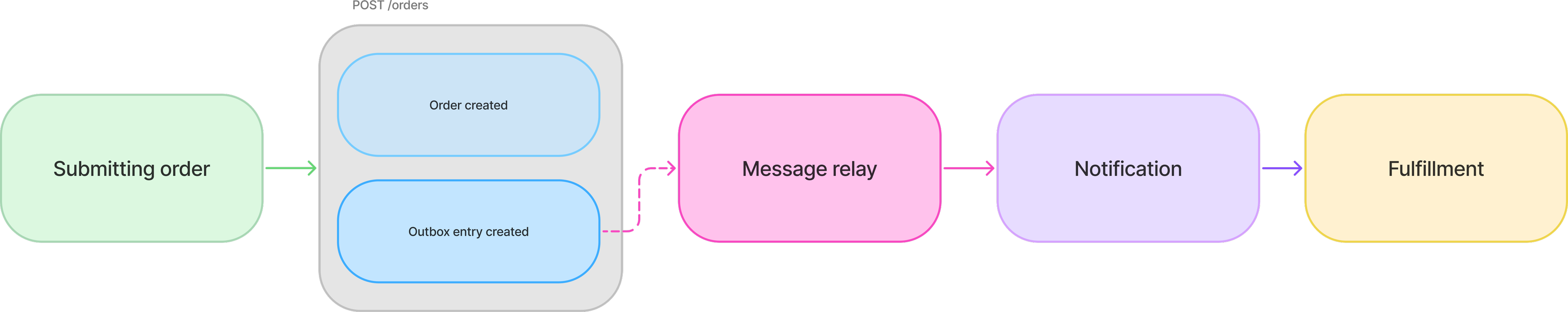

The solution is simple: avoid writing to two systems in the API call altogether.

The Transactional Outbox pattern is a simple but effective way to guarantee that messages are published only if the database transaction commits. Instead of publishing the message directly, we write it to an event (outbox) table as part of the same transaction. From there, a message relay (a background worker) picks up the entry and publishes it to your messaging platform.

The API endpoint implementation looks like this:

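```python
@router.post('/orders')
async def create_order(order):
    # Both writes share one database transaction:
    # either both are committed or neither is.
    async with get_database_transaction() as tx:
        order_created = await save_to_database(order)

        order_created_event = ...

        # Inserts into the outbox table instead of publishing to the broker
        await publish_event_to_outbox(order_created_event)

    # The transaction has been committed at this point
    return order_created
```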

which effectively translates to the following SQL statements:

```sql
BEGIN;
INSERT INTO orders (...) VALUES (...);
INSERT INTO event_outbox (event_type, payload) VALUES ('order_created', '{...}');
COMMIT;
```
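You can try the same idea end to end with Python's built-in sqlite3 module. This minimal sketch assumes an in-memory SQLite database in place of your production database and a simplified schema (the id and item columns are illustrative). create_order_with_outbox is a hypothetical helper. The sketch shows that a failure between the two inserts rolls back both rows:

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for the production database
conn.executescript("""
    CREATE TABLE orders (id INTEGER PRIMARY KEY, item TEXT NOT NULL);
    CREATE TABLE event_outbox (
        id INTEGER PRIMARY KEY,
        event_type TEXT NOT NULL,
        payload TEXT NOT NULL
    );
""")

def create_order_with_outbox(conn: sqlite3.Connection, item: str, crash: bool = False) -> int:
    # `with conn` commits if the block succeeds and rolls back on any exception,
    # so the order row and its outbox row are written atomically.
    with conn:
        cur = conn.execute("INSERT INTO orders (item) VALUES (?)", (item,))
        order_id = cur.lastrowid
        if crash:
            raise RuntimeError("simulated failure before the outbox insert")
        conn.execute(
            "INSERT INTO event_outbox (event_type, payload) VALUES (?, ?)",
            ("order_created", json.dumps({"order_id": order_id, "item": item})),
        )
    return order_id

create_order_with_outbox(conn, "book")
try:
    create_order_with_outbox(conn, "lamp", crash=True)
except RuntimeError:
    pass  # the order insert for "lamp" was rolled back as well

print(conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0])        # 1
print(conn.execute("SELECT COUNT(*) FROM event_outbox").fetchone()[0])  # 1
```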

Implementing the message relay

The obvious next question is how to implement the message relay. There are multiple options to choose from, each with its own advantages, so choose the one that fits your current situation.

Simple: cronjob polling

A scheduled job queries the outbox table for outstanding events at a fixed interval. It is advisable to cap the number of events processed per iteration (e.g. 50).

After publishing the messages, mark the corresponding database entries as processed. If publishing a message fails, a future iteration will pick up the same records and retry them for you. However, monitor how many records are picked up from the database and how many are successfully published. If you consistently pick up more events than you successfully publish, a backlog builds up. In the worst case, a faulty event blocks all events after it from being published.
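Here is a minimal sketch of one polling iteration, assuming SQLite, a hypothetical processed flag column on the outbox table, and a caller-supplied publish callable (the relay_once name is also illustrative). A scheduler such as cron would call relay_once at the chosen interval. The sketch stops at the first failure so that ordering is preserved, which is exactly why a permanently failing event blocks the ones behind it:

```python
import json
import sqlite3
from typing import Callable

BATCH_SIZE = 50  # per-iteration cap, as suggested above

def relay_once(conn: sqlite3.Connection, publish: Callable[[str, str], None]) -> int:
    """Run one polling iteration and return how many events were published."""
    rows = conn.execute(
        "SELECT id, event_type, payload FROM event_outbox "
        "WHERE processed = 0 ORDER BY id LIMIT ?",
        (BATCH_SIZE,),
    ).fetchall()
    published = 0
    for event_id, event_type, payload in rows:
        try:
            publish(event_type, payload)
        except Exception:
            # Stop at the first failure to preserve ordering; the next
            # iteration retries from this event.
            break
        # Mark as processed only after a successful publish. A crash between
        # publish() and this UPDATE causes a re-publish (at-least-once).
        with conn:
            conn.execute("UPDATE event_outbox SET processed = 1 WHERE id = ?", (event_id,))
        published += 1
    return published

# Usage: in-memory database and a broker that is down at first
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE event_outbox (id INTEGER PRIMARY KEY, event_type TEXT NOT NULL, "
    "payload TEXT NOT NULL, processed INTEGER NOT NULL DEFAULT 0)"
)
with conn:
    conn.executemany(
        "INSERT INTO event_outbox (event_type, payload) VALUES (?, ?)",
        [("order_created", json.dumps({"order_id": i})) for i in range(1, 4)],
    )

sent: list[str] = []
broker_up = False

def publish(event_type: str, payload: str) -> None:
    if not broker_up:
        raise ConnectionError("broker unavailable")
    sent.append(payload)

first_run = relay_once(conn, publish)   # broker down: nothing is marked as processed
broker_up = True
second_run = relay_once(conn, publish)  # the same records are picked up and retried
print(first_run, second_run)            # 0 3
```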

This option shines through its simplicity and is the most suitable way to get started. Its weaknesses are throughput and lag. The maximum throughput is fixed by the polling interval and the per-iteration limit, and the lag depends on the polling interval. For example, polling every 30 seconds for up to 100 records gives a maximum throughput of 200 records per minute and, as long as no backlog builds up, a worst-case lag of 30 seconds.
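The arithmetic from the example as a tiny helper. polling_limits is a hypothetical name, and the lag figure assumes no backlog has built up:

```python
def polling_limits(interval_s: float, batch_size: int) -> tuple[float, float]:
    """Return (max throughput in records/minute, worst-case lag in seconds)
    for a poller that runs every `interval_s` seconds and publishes at most
    `batch_size` records per run, assuming no backlog."""
    runs_per_minute = 60 / interval_s
    return runs_per_minute * batch_size, interval_s

print(polling_limits(30, 100))  # (200.0, 30)
```

If events arrive faster than the first number, the backlog grows on every run. You then have to shorten the interval, raise the batch size, or move to a different relay.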

Since events may be retried, delivery is at-least-once. If the relay publishes a message but fails before marking it as processed, the message will be published again. Your message processing must therefore be idempotent.
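One common way to make a consumer idempotent is to deduplicate by event ID. This minimal sketch assumes that each event carries a unique ID (for example, the outbox row's primary key) and that the consumer has its own database. The processed_events and fulfilments tables and handle_order_created are hypothetical. The dedup record and the side effect are written in one transaction, so a redelivered event becomes a no-op:

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # the consumer's own database
conn.executescript("""
    CREATE TABLE processed_events (event_id TEXT PRIMARY KEY);
    CREATE TABLE fulfilments (order_id TEXT NOT NULL);
""")

def handle_order_created(event_id: str, order_id: str) -> bool:
    """Process an event at most once per event_id; return False for duplicates."""
    try:
        with conn:
            # The PRIMARY KEY makes a second insert of the same event_id fail...
            conn.execute("INSERT INTO processed_events (event_id) VALUES (?)", (event_id,))
            # ...and the side effect commits or rolls back together with it.
            conn.execute("INSERT INTO fulfilments (order_id) VALUES (?)", (order_id,))
    except sqlite3.IntegrityError:
        return False  # already processed: ignore the redelivery
    return True

print(handle_order_created("evt-1", "order-1"))  # True
print(handle_order_created("evt-1", "order-1"))  # False
print(conn.execute("SELECT COUNT(*) FROM fulfilments").fetchone()[0])  # 1
```

Side effects outside the database, such as sending an email, cannot join this transaction. They need their own deduplication, for example an idempotency key supported by the downstream service.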

Advanced: Leveraging Change Data Capture (CDC)

With CDC, we can hook into the database's transaction log, e.g. PostgreSQL's Write-Ahead Log (WAL) or MySQL's binary log, and stream changes in real time.

In PostgreSQL, you can create a logical replication slot with the pg_create_logical_replication_slot function, using the wal2json output plugin. This requires wal_level = logical and the wal2json plugin installed on the database server.

```sql
SELECT pg_create_logical_replication_slot('outbox_replication', 'wal2json');
```

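The following queries read the changes recorded in the slot. wal2json's add-tables option restricts the output to the outbox table, and actions restricts it to inserts:

```sql
-- peek into changes (they remain in the slot)
SELECT * FROM pg_logical_slot_peek_changes('outbox_replication', NULL, NULL, 'add-tables', '*.event_outbox', 'actions', 'insert');

-- consume changes (they are removed from the slot)
SELECT * FROM pg_logical_slot_get_changes('outbox_replication', NULL, NULL, 'add-tables', '*.event_outbox', 'actions', 'insert');
```

With pg_logical_slot_peek_changes, we can check for new entries without consuming them. pg_logical_slot_get_changes then returns the changes and advances the slot, so they are not returned again.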
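The data column returned by these functions contains the text emitted by wal2json. In wal2json's default format-version 1, each transaction becomes one JSON document with a "change" array, and each entry lists columnnames and columnvalues in parallel. The sketch below turns such a document into outbox rows. parse_outbox_changes is a hypothetical helper, and the sample is hand-written rather than captured output (its id column is an assumption):

```python
import json
from typing import Any

def parse_outbox_changes(data: str, table: str = "event_outbox") -> list[dict[str, Any]]:
    """Turn one wal2json (format-version 1) `data` value into outbox rows."""
    rows = []
    for change in json.loads(data).get("change", []):
        if change.get("kind") == "insert" and change.get("table") == table:
            # Pair column names with their values for this inserted row
            rows.append(dict(zip(change["columnnames"], change["columnvalues"])))
    return rows

# Hand-written sample in the format-version 1 shape (not captured output)
sample = json.dumps({
    "change": [{
        "kind": "insert",
        "schema": "public",
        "table": "event_outbox",
        "columnnames": ["id", "event_type", "payload"],
        "columntypes": ["integer", "text", "jsonb"],
        "columnvalues": [42, "order_created", "{\"order_id\": 7}"],
    }]
})

for row in parse_outbox_changes(sample):
    print(row["id"], row["event_type"], json.loads(row["payload"]))
# 42 order_created {'order_id': 7}
```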

With this, we can replace our scheduled poller with a more dynamic solution that improves throughput, as shown in this pseudocode:

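```python
import asyncio

async def run():
    while not_interrupted:
        # Cheap check: look at pending changes without consuming them
        has_events = await peek_for_outbox_events()
        if not has_events:
            await asyncio.sleep(1)
            continue

        # Consumes the changes: they are removed from the replication slot
        events = await get_outbox_events(max_events=10)
        for event in events:
            try:
                await publish(event)
            except Exception:
                # Consumed changes cannot be re-read, so park failures in a DLQ
                await dlq(event)
```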

This way, the relay sleeps only when there is nothing to publish, which gives better throughput and less lag than fixed-interval polling. However, changes consumed from the replication slot are no longer available in the next run. Any failed events therefore have to be put into a dead-letter queue to be retried later.
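You can exercise the peek/consume loop and its dead-letter handling without a database. In this runnable sketch, FakeReplicationSlot is a hypothetical in-memory stand-in for the replication slot; its peek and get methods mirror pg_logical_slot_peek_changes and pg_logical_slot_get_changes. The publish function is a fake broker that rejects one event, and the dead-letter queue is just a list. The idle sleep is shortened so the example finishes quickly:

```python
import asyncio
from collections import deque

class FakeReplicationSlot:
    """Hypothetical in-memory stand-in for a logical replication slot."""

    def __init__(self, events: list[str]):
        self._pending = deque(events)

    async def peek(self) -> bool:
        # Mirrors pg_logical_slot_peek_changes: look without consuming.
        return bool(self._pending)

    async def get(self, max_events: int) -> list[str]:
        # Mirrors pg_logical_slot_get_changes: returned changes are gone for good.
        batch = []
        while self._pending and len(batch) < max_events:
            batch.append(self._pending.popleft())
        return batch

async def run(slot, publish, dead_letters: list[str], stop: asyncio.Event, max_events: int = 10) -> None:
    while not stop.is_set():
        if not await slot.peek():
            await asyncio.sleep(0.01)  # idle back-off (1 second in the pseudocode)
            continue
        for event in await slot.get(max_events):
            try:
                await publish(event)
            except Exception:
                # The event cannot be read from the slot again, so park it in the DLQ.
                dead_letters.append(event)

async def main() -> tuple[list[str], list[str]]:
    slot = FakeReplicationSlot([f"order-{i}" for i in range(5)])
    published: list[str] = []
    dead_letters: list[str] = []

    async def publish(event: str) -> None:
        if event == "order-3":
            raise ConnectionError("broker rejected the event")
        published.append(event)

    stop = asyncio.Event()
    relay = asyncio.create_task(run(slot, publish, dead_letters, stop))
    await asyncio.sleep(0.1)  # give the relay time to drain the slot
    stop.set()
    await relay
    return published, dead_letters

published, dead_letters = asyncio.run(main())
print(published)     # ['order-0', 'order-1', 'order-2', 'order-4']
print(dead_letters)  # ['order-3']
```

This sketch inherits the trade-off described above. Anything the relay has consumed but not yet published or dead-lettered is lost if the process crashes mid-batch.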

This option comes with increased operational complexity and requires an understanding of replication slots. To reduce that complexity, you can use managed CDC services such as AWS DMS, Google Datastream or Artie, or the open-source Debezium.

I have personally only tried AWS DMS and can recommend it, but keep an eye on the DMS monitoring of how many records are processed to ensure everything works as expected. An even better approach is to track both the number of inserted outbox records and the number of CDC-processed records as metrics. If the two diverge, you immediately know something is wrong in the system.
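Here is a minimal sketch of that divergence check. It assumes you already collect two counters over the same time window: rows inserted into event_outbox, and records your CDC pipeline reports as processed. check_outbox_cdc_gap and its tolerance parameter are hypothetical. In practice, this logic would more likely live in an alert rule in your monitoring system:

```python
from typing import Optional

def check_outbox_cdc_gap(inserted: int, processed: int, tolerance: int = 0) -> Optional[str]:
    """Compare outbox inserts with records the CDC pipeline reports as processed.

    Both counts are assumed to cover the same time window. Returns an alert
    message when they diverge beyond `tolerance`, otherwise None.
    """
    gap = inserted - processed
    if gap > tolerance:
        return f"CDC is behind: {gap} outbox records not processed"
    if gap < 0:
        return f"CDC processed {-gap} more records than were inserted"
    return None

print(check_outbox_cdc_gap(1000, 1000))     # None
print(check_outbox_cdc_gap(1000, 940, 50))  # CDC is behind: 60 outbox records not processed
```

A small tolerance absorbs records that are still in flight at the moment the counters are read.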

Conclusion

The Transactional Outbox pattern elegantly sidesteps the dual write problem by ensuring atomicity between state changes and event emission. While it's not a silver bullet, it's a reliable and well-understood solution to one of the fundamental problems in distributed systems.

If you're building systems that need to be both responsive and reliable, this pattern should be a foundational part of your architecture.

Happy building, and remember: build your systems for failure!